Speech and communication skills are integral to independence and well-being. As technology advances, speech therapists are using new and innovative ways to incorporate it into therapy sessions. Read more about three projects that synthesize technological advances and speech therapy.

Kaspar the robot: helping children with autism communicate

Meet Kaspar: he can be talked to, tickled, stroked, played with and you can even prod and poke him and he won’t run away. Kaspar, developed at the University of Hertfordshire by a team under professor Kerstin Dautenhahn, is a child-like talking robot with a simplified human face and moveable limbs and features. He’s designed to help children with autism develop essential social skills through games such as peekaboo and learning activities.

Kaspar, the size of a small child, was “born” back in 2005 and has been developed since thanks to funding raised by the university. The multi-disciplinary scope of the project, spanning robotics, psychology, assistive technology and autism therapy, harnesses technology to assist communication. But this broad approach means it falls between research council stools and misses out on their grants, says Dautenhahn.

Until now, Kaspar has been used to help children in schools under the supervision of researchers. In the latest phase of the research, redesigned, wireless and more personalised versions of the little robot – controlled using a tablet – will go out directly to schools and families in the next few weeks.

Parents and teachers will play games such as encouraging autistic youngsters to mimic and discuss different facial expressions, or even to pinch him and discuss why he cries out and looks sad, recording the results for the Hertfordshire team to analyse. “This is a new field study phase where Kaspar will go out into the world without the helping hand of researchers,” says Dautenhahn, whose work has combined both academic research – including collaboration with psychologists and clinicians – and the nuts and bolts of developing the robot as a potential mass product.

It is Kaspar’s highly predictable, simplified interactions that appeal to autistic children who may be overwhelmed by the complexity of everyday human communication, she believes.

“His simplicity appeals to children, and the fact that they can respond to him in their own time. If you are silent for 60 seconds, Kaspar won’t mind – he doesn’t make judgments.”

Alongside analysis of the new phase results, Dautenhahn and her team are seeking an investor to help give wider access to Kaspar at an affordable cost for schools and families.

Computers helping stroke patients with chronic speech impairments

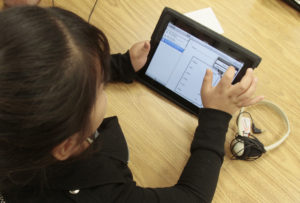

For stroke sufferers, damage to speech is a common and debilitating consequence, often requiring long periods of therapy. A new computerised treatment developed at Sheffield University and now used by healthcare teams in a range of countries helps patients treat themselves at home by following a carefully staged programme designed to gradually rebuild speech and the layers of connections that lie beneath it.

High-intensity stimulation through images, words and sounds is given to kick-start damaged nerve systems

The research, led by Sandra Whiteside and professor Rosemary Varley (now at UCL), rests on a new and controversial understanding of the way speech works. While traditional theories said speech output was put together sound by sound, the two academics developed an alternative model, arguing that speech in fact depends on plans stored in our brains for words and whole phrases.

Based on this theory, the women devised a computerised treatment – Sheffield Word, known as Sword – giving stroke and brain damage patients high-intensity stimulation through images, words and sounds to try to kick-start damaged nerve systems and get them speaking again.

“We are trying to connect up the whole system,” says Whiteside. “Rather than just focusing on getting people to speak from the outside, you are training up their perceptive system, bombarding it with all kinds of stimuli before you get them to produce speech.”

A patient using the software might start by hearing a word, such as “cat”, and matching it with an image from a choice of four on screen. A subsequent stage would see them imagining the sound of the word, then later imagining producing that sound. “Speech, the last tough hurdle, comes right at the end,” Whiteside explains.
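The staged progression described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the Sword software itself: the stage names paraphrase Whiteside's description, and `MatchingTrial`, `next_stage` and the rule for moving on are all assumptions made for the example.

```python
from dataclasses import dataclass

# Stage names paraphrase the article; the real Sword programme may differ.
STAGES = [
    "match heard word to picture",
    "imagine the sound of the word",
    "imagine producing the sound",
    "say the word aloud",
]


@dataclass
class MatchingTrial:
    """One first-stage exercise (hypothetical structure)."""
    target: str    # word played to the patient, e.g. "cat"
    choices: list  # the four pictures offered on screen

    def is_correct(self, picked: str) -> bool:
        return picked == self.target


def next_stage(current: int, passed: bool) -> int:
    """Move on one stage after a pass; otherwise stay on the same stage.

    The pass-then-advance rule is an assumption, not taken from the article.
    """
    if passed and current < len(STAGES) - 1:
        return current + 1
    return current


trial = MatchingTrial(target="cat", choices=["cat", "cup", "hat", "dog"])
stage = next_stage(0, trial.is_correct("cat"))
print(STAGES[stage])  # imagine the sound of the word
```

Speech production sits last in the list, mirroring Whiteside's point that it comes right at the end.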

With results showing significant improvements for patients with chronic speech impairments, the Sheffield team are now mining the data from a Bupa-funded study of 50 patients. Funding for this research area has “always been very difficult”, says Whiteside, but over 200 copies of the programme have also been sold, mainly to NHS trusts.

Speech technology: closing the gap between machine and human

When humans listen to speech, we’re extraordinarily forgiving: we’ll make out words against the sound of crashing waves or engine noise, filter out a single voice amid the hubbub of a football crowd and cope with unfamiliar accents and rushed or emotional speech.

While giant leaps have been made both in speech recognition technologies (converting audio to text) and speech synthesis over recent decades, machines have yet to catch up with our ability to interact fast and naturally, constantly learning and adapting as we do so.

Research being conducted by scientists at the universities of Cambridge, Edinburgh and Sheffield seeks to shrink the performance gap between machine and human, aiming to make speech technologies more usable and natural. The five-year, $8m project funded by the Engineering and Physical Sciences Research Council is intended to develop a new generation of the technology, opening up a wide range of potential new applications.

“We want to take the idea of adaptation in speech technology and take it significantly further than it has ever been used before,” says professor Phil Woodland of Cambridge’s department of engineering. Currently, Woodland explains, machines – which work by breaking down speech into sounds and calculating the probabilities of likely words and sentences – have required significant amounts of data from individual speakers in order to learn sufficiently to recognise their speech, entailing a long process of “training”.
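Woodland's summary of how recognisers work, matching sounds and then weighing up likely words, can be illustrated with a toy calculation. The sketch below is not the project's system: the candidate words, the probabilities and the `best_word` helper are all invented for the example. It combines how well each word fits the audio with how likely that word is in context, and picks the highest scorer.

```python
import math

# All figures are made up for illustration; none come from the project.
# How well each candidate word matches the sounds that were heard.
acoustic = {"wreck": 0.40, "recognise": 0.35, "reckon": 0.25}
# How likely each candidate is to follow the previous word, "to".
language = {"wreck": 0.05, "recognise": 0.60, "reckon": 0.35}


def best_word(acoustic, language):
    """Return the candidate with the highest combined log-probability."""
    scores = {
        word: math.log(acoustic[word]) + math.log(language[word])
        for word in acoustic
    }
    return max(scores, key=scores.get)


print(best_word(acoustic, language))  # recognise
```

Here "wreck" is the closest match to the audio alone, but the context makes "recognise" the more probable choice overall. Adapting to a new speaker would, in this picture, mean adjusting the first table to suit that person's voice.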

“Our aim now is to improve the technology to allow rapid adaptation and unsupervised or lightly supervised training,” says Woodland, whose team have worked with a range of industry partners including Google, Nuance and IBM. “We want to leverage data from different situations.”

Thanks to the BBC, the researchers have been able to test their work on several months of the broadcaster’s output. While transcribing clearly articulated news reports is straightforward, piecing together speech in an episode of Doctor Who – with its unearthly noises and music – or fast, excited commentary in sports programmes is more challenging.

The same technology is also being used by the team to develop personalised speech synthesis systems, designed to reflect a user’s individual accent and expressions.

Source: The Guardian